New post! Might be a bit relevant given the news about DDG adopting LLM integration.

Perhaps more relevant than you might have initially thought: TESTING: Mojeek Summary

With Mojeek Summary having an unsurprisingly high chance of producing hallucinations, I’m interested in hearing Mojeek’s opinion about this article, especially whether they have any plans for resolving or minimizing this issue.

That the Mojeek summary will sometimes produce hallucinations is inevitable. Like all others we are using an autoregressive LLM (currently Mixtral), and such models have a limited ability to:

1. remember

2. retrieve

3. reason

4. plan

5. understand the world

Rapid progress is being made on 1, with longer context becoming possible. RAG specifically addresses 2, and is of course used in Mojeek Summary. Issues 3, 4 and 5 are not really addressed at all, but they are research topics where we can expect progress in the medium term. Techniques for making better use of RAG, and models with longer context, are evolving rapidly and we will be able to take advantage of these.

Using RAG with search results already dramatically reduces hallucinations, so the occurrence of hallucinations is not high. We would be very happy to get feedback on any that are seen; these will help us test the improvements that we make.

Our concern about hallucinations is why we always show the Mojeek Summary alongside the search results, with citations from the former to the latter. A summary displayed on its own, without citations, is not something we think should be available for general usage.
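For readers unfamiliar with RAG, here is a minimal sketch of the general idea: the search results are numbered and placed in the prompt so the model can cite them. This is only an illustration, not Mojeek's actual implementation; the function name, the result fields (`title`, `snippet`) and the prompt wording are all hypothetical.

```python
def build_summary_prompt(query, results, max_results=8):
    """Assemble a RAG-style prompt from search results (hypothetical sketch).

    `results` is assumed to be a list of dicts with 'title' and 'snippet'
    keys; this shape is an assumption, not a real Mojeek data format.
    """
    # Number each source so the summary can cite it as [1], [2], ...
    source_lines = [
        f"[{i}] {r['title']}: {r['snippet']}"
        for i, r in enumerate(results[:max_results], start=1)
    ]
    sources = "\n".join(source_lines)
    return (
        "Answer the query using only the numbered sources below. "
        "Cite each statement with its source number, e.g. [1].\n\n"
        f"Query: {query}\n\n"
        f"Sources:\n{sources}\n"
    )
```

Note that this also shows why off-topic results matter: whatever lands in the numbered sources is what the model is asked to summarise.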

Where would you like these reports? Through the Submit Feedback button on the SERP?

I encountered a number of summaries that weirdly tied together low-quality sources to produce a bizarre conclusion in my initial testing, but I must admit I haven’t had a lot of time to look at it recently…

Edit: I ask because I don’t know whether the summaries are ephemeral. They might be bad one day, and fine the next. Or vice versa.

I’d suggest you use the Submit Feedback button, as it is the easiest to use and gives us the search parameters and query. If we look at the details within 24 hours, which is usually the case, the results will be the same as what you see; the &kalid=number parameter references the 24-hour cache.

Thank you.

In my experience, the hallucination rate is astoundingly high: around 90% of the summaries have at least one sentence that is completely invented by the AI. Though having citations helps in verifying the information, this is far from foolproof, as topics unfamiliar to the user are difficult to check, as @Seirdy pointed out in his post. Moreover, I’ve encountered situations similar to @gnome’s, with the frequent appearance of irrelevant results causing the summary to veer off-topic.

I do agree that some issues have not been addressed at all… but shouldn’t the default action be to not release such faulty programs to the public? If a program is failing more than half of the time, it can’t even be considered a beta. Bizarrely, such logic isn’t applied to these “answer engines”, perhaps because they’re quite adept at masking these failures.

Anyways, I have always submitted feedback whenever I encounter these hallucinations, and I’d be willing to post them here once I encounter them again to give some detailed demonstration.

Any feedback is appreciated. Thank you. The “Submit Feedback” button is the most convenient way to do this. This gives us the key information about language and country.

Your experience is evidently different to that of almost everyone who has provided feedback, so we might need to dig deeper to understand why it would be so different for you, and any others.

The Mojeek Summary is turned off by default. Currently <1% of searches use it.

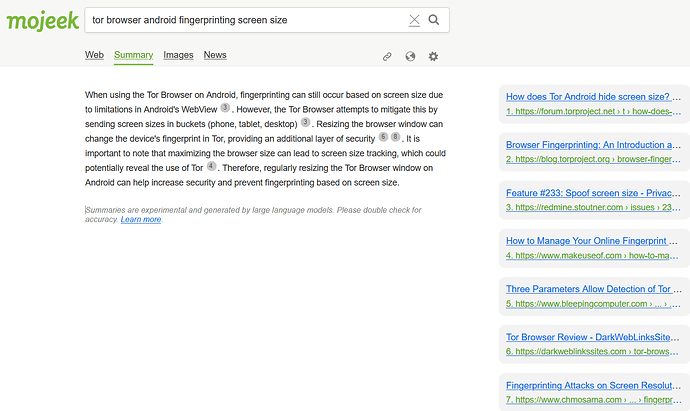

Here’s an example of a hallucination (using the default settings of Mojeek): tor browser android fingerprinting screen size - Mojeek Search

1st statement is the correct answer.

3rd statement is true, but off-topic (because you can’t resize the browser on mobile).

The rest of the statements are hallucinations, especially that bizarre conclusion at the end.

This is a common scenario I encounter with Mojeek Summary, where it (occasionally) gets the correct answer, then forces itself to include the off-topic results in the summary, resulting in a mishmash of unrelated facts and hallucinations.

Though I’m not sure if my experience matches that of others, as I took painstaking efforts, more than an hour, to check each statement. Most people I encounter using answer engines often rely solely on gut feel instead, never bothering to verify at all, or are content with checking only a single statement, so it’s not surprising if they didn’t notice the hallucinations.

Thank you very much @snatchlightning. That reads like a very useful example to use in testing potential improvements.

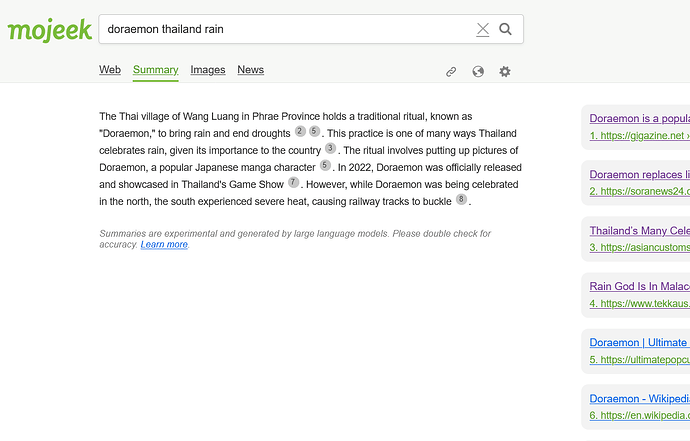

Here’s another (rather hilarious) example: doraemon thailand rain - Mojeek Search

The entire summary is wrong (for those curious, the correct name of the ritual is “Hae Nang Meaw”, where they parade a cage with a female cat, which in modern times has been replaced by a Doraemon plushie, to ask for rain).

The hallucination again seems to be mainly due to off-topic results being combined with the relevant ones in the summary.

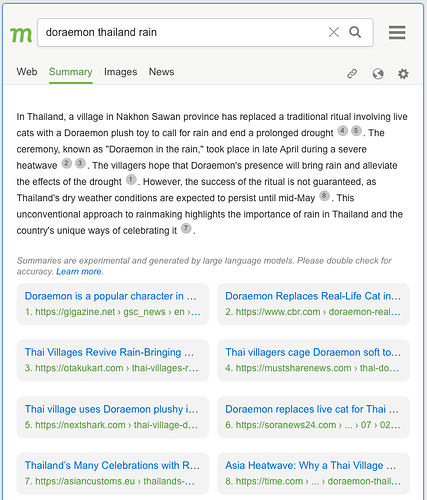

We have been working on various updates to improve summaries, and some have been pushed. Hallucinations will still occur, so this is an incremental improvement. Here is what I got for this same search, though some of the top 8 results are different, so it is not possible to say what the difference should be attributed to.

We have added the option to specify the LLM temperature (the default of 0.5 is used in the image above); for details of how to use it, see: Mojeek Search Summaries - #4 by Colin
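As background on what temperature does in general, here is a small sketch of temperature-scaled softmax over next-token scores. It illustrates the standard technique only and says nothing about how Mojeek or Mixtral are configured internally; the function name and the example scores are made up for illustration.

```python
import math


def softmax_with_temperature(logits, temperature):
    """Turn raw token scores into probabilities at a given temperature.

    Lower temperatures sharpen the distribution; a temperature of 0 is
    treated here as greedy decoding (all probability on the top score).
    """
    if temperature <= 0:
        # Greedy case: avoid dividing by zero, pick the highest score.
        best = max(range(len(logits)), key=lambda i: logits[i])
        return [1.0 if i == best else 0.0 for i in range(len(logits))]
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


# Hypothetical scores for three candidate tokens.
example_logits = [2.0, 1.0, 0.5]
greedy = softmax_with_temperature(example_logits, 0.0)
default = softmax_with_temperature(example_logits, 0.5)
```

With a temperature of 0 the model always picks its top candidate, so repeated runs over the same results should be more repeatable; that does not by itself stop a confident but wrong statement.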

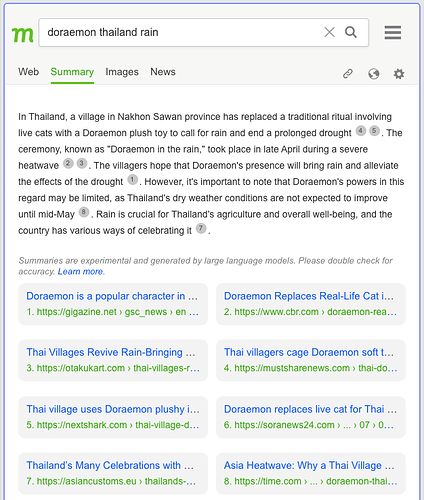

Below is the Summary for the example above, with temperature=0.0.

search?q=doraemon+thailand+rain&fmt=summary&kaltem=0
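For anyone who wants to repeat a search at several temperatures, here is a small sketch that builds the query string shown above. The `q`, `fmt` and `kaltem` parameters are taken from that link; the base URL and the helper name are assumptions for illustration.

```python
from urllib.parse import urlencode

# Assumption: the search endpoint lives at this address.
BASE_URL = "https://www.mojeek.com/search"


def build_summary_url(query, temperature=0.5, base_url=BASE_URL):
    """Build a summary search URL with an explicit temperature (hypothetical helper)."""
    params = {
        "q": query,
        "fmt": "summary",
        # ':g' drops a trailing '.0', so 0.0 becomes '0' as in the link above.
        "kaltem": f"{temperature:g}",
    }
    return f"{base_url}?{urlencode(params)}"


url = build_summary_url("doraemon thailand rain", temperature=0.0)
```

Comparing the summary at `kaltem=0` with the default of 0.5 for the same query is an easy way to gather examples worth sending through Submit Feedback.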