We have added a parameter, &kaltem, so that you can change the LLM (Large Language Model) temperature setting in the URL. The current default is 0.5; you can change it to, for example, 1.0 like this:

Mojeek =Should+AI+be+open+source%3F&fmt=summary&kaltem=1.0
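If you want to generate such URLs from a script, something like the following minimal sketch may help. Only the `fmt=summary` and `kaltem` parameters come from the example above; the `BASE_URL` placeholder, the `q` query-parameter name, and the `build_summary_url` helper are assumptions made for illustration, so substitute the real values from your own address bar.

```python
from urllib.parse import urlencode

# Assumption: placeholder endpoint, not the real search address.
BASE_URL = "https://search.example/search"


def build_summary_url(query, kaltem=0.5, base_url=BASE_URL, query_param="q"):
    """Build a summary URL with an explicit kaltem (temperature) value.

    `query_param` is an assumed name for the search-terms parameter.
    """
    kaltem = float(kaltem)
    # The supported range for kaltem is 0.0 to 1.0.
    if not 0.0 <= kaltem <= 1.0:
        raise ValueError("kaltem must be between 0.0 and 1.0")
    params = {
        query_param: query,
        "fmt": "summary",
        "kaltem": str(kaltem),
    }
    # urlencode turns spaces into '+' and '?' into '%3F'.
    return f"{base_url}?{urlencode(params)}"


if __name__ == "__main__":
    print(build_summary_url("Should AI be open source?", kaltem=1.0))
```

Rejecting out-of-range values up front is a design choice for this sketch; how the service itself treats values outside 0.0 to 1.0 is not something we state here.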

A lower value makes the model’s responses more deterministic, predictable, and consistent. A higher value makes the responses more diverse and random. The range you can use is 0.0 to 1.0.
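To see why a lower temperature gives more predictable output, here is a small sketch of the textbook way temperature is usually applied: the model's raw scores (logits) are divided by the temperature before being turned into probabilities. This illustrates the general technique only; the logits are made up, the `temperature_softmax` helper is hypothetical, and it is an assumption that the model behind &kaltem works exactly this way.

```python
import math


def temperature_softmax(logits, temperature):
    """Convert raw scores into probabilities, scaled by temperature."""
    if temperature < 0:
        raise ValueError("temperature must be non-negative")
    if temperature == 0:
        # Temperature 0 is treated as greedy: all weight on the top score.
        best = max(range(len(logits)), key=lambda i: logits[i])
        return [1.0 if i == best else 0.0 for i in range(len(logits))]
    scaled = [x / temperature for x in logits]
    # Subtract the maximum before exponentiating for numerical stability.
    top = max(scaled)
    exps = [math.exp(s - top) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


if __name__ == "__main__":
    example_logits = [2.0, 1.0, 0.5]  # made-up scores for three candidate tokens
    for t in (0.0, 0.5, 1.0):
        probs = temperature_softmax(example_logits, t)
        print(t, [round(p, 3) for p in probs])
```

As the temperature drops, more of the probability concentrates on the highest-scoring candidate, which is why repeated runs look more alike.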

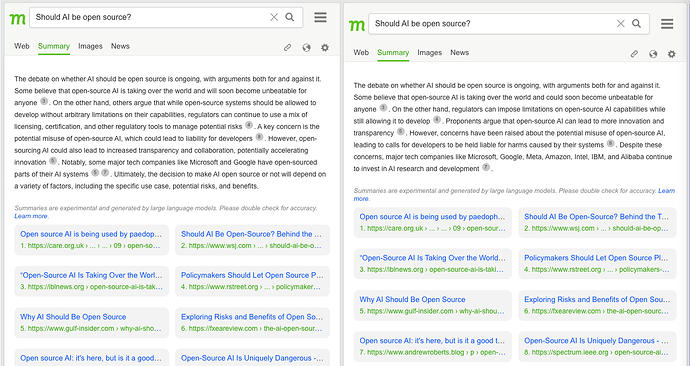

In the example in the image, the left side uses &kaltem=0.0 and the right side uses &kaltem=0.5.