Artificial intelligence is the simulation of cognitive processes by machines, a concept coined by John McCarthy in 1956 to define a new field aimed at replicating human-like intelligence through artificial means. Intelligence in the conventional sense is the cognitive ability of humans and animals to learn, understand, and solve problems.

If you take the view that machine can “think” and perform tasks that would require intelligence if done by humans, artificial intelligence has long been around. Personally I think (pun intended) that we when we talk about human intelligence we shouldn’t drop the word human. Intelligence is a much broader field that encapsulates human, animal, artificial and other intelligences. We are again making the mistake as viewing ourselves as the centre of things, just as we did when we thought the Sun revolved the Earth. All the references to AGI (Artificial General Intelligence) are unhelpful, and often used by hype-masters, so let’s not get into that. Instead I think it will be helpful to look at how we got to where we are. We cannot predict where we are headed and neither can AI, thankfully, but historical context can help look at challenges in a less tribal way. What follows is my own context and perspective.

Much of the earlier development of AI, notably through to the 1980s was characterised by usage of symbolic reasoning, logic-based methods, and rule-based systems to simulate aspects of human intelligence. It’s known as GOFAI (Good Old-Fashioned Artificial Intelligence). I dabbled in that myself back in the day, but in the 80s and 90s my early career focussed on HPC (High-Performance Computing). HPC started with supercomputers and often used parallel processing techniques to solve complex computational problems that require a vast amount of processing power. HPC has been used in fields such as scientific simulations, weather forecasting, molecular modelling, and big data analysis for decades. I suppose I have a reasonable claim to be one the main people adapting and developing HPC algorithms, for solid and fluid dynamics simulations, and porting them to PCs. Such computations are (still) difficult to port to GPUs, for several technical reasons, so my own experience (outside developing visualisation tools) was largely with CPUs.

The rise of AI we see today refers to ML (Machine Leaning) and its subset Deep Learning. The difference here from GOFAI, and indeed HPC, is that the learning algorithms are derived from data. And today, of course, there is a lot more data around! In the 1990s it was realised that ML models, particularly neural networks, improved significantly as the size of the training data increased. And in 1995, Vapnik’s Statistical Learning Theory provided a theoretical framework for understanding the relationship between data size, model complexity, and generalization performance. His theory highlighted that with more data, a model could achieve better generalization, assuming the model is complex enough to capture the underlying patterns in the data.

In the 2010s deep learning models like convolutional neural networks (CNNs) and recurrent neural networks (RNNs) demonstrated remarkable performance on tasks such as image recognition, speech recognition, and language translation, but this performance was heavily dependent on the availability of large-scale data. The breakthrough moment came with the AlexNet model, which won the ImageNet Large Scale Visual Recognition Challenge (ILSVRC) in 2012. AlexNet, a deep convolutional neural network, was trained on millions of labeled images from the ImageNet dataset. The success of AlexNet was a clear demonstration that large-scale data, combined with powerful models and computational resources (GPUs), could significantly improve the performance of machine learning systems. AlexNet was developed by Alex Krizhevsky, Ilya Sutskever, and Geoffrey Hinton; you might have heard of them! This above all was the “Aha moment” for AI researchers and indeed numerous investors and entrepreneurs like myself.

Personally speaking I got deeply involved in AI during 2014 to 2019 at the University of Sussex supporting researchers and developing projects in ML based on the vast amounts of data coming from astronomy and partical physics experiments. I also worked on quantum computers there, but that is another story. More relevantly these groups were also developing techniques in Bayesian inference, which is a statistical method that has played a significant role in the development of various machine learning techniques. In Bayesian inference theoretical and empirical models can be used and then combined with machine learning to handle uncertainties, probabilistically. Bayesian methods don’t require anything like as much data as deep leaning, so we spun-out a company to apply these techniques to challenges for SMEs with smaller and more specialised datasets, in 2019.

Meanwhile the next big breakthrough in AI was the Transformers paper of 2017, called “Attention is All You Need” published by Vaswani et al. at Google. The Transformer architecture has since revolutionized the field of natural language processing (NLP) and has kickstarted the current hype-wave seen with LLMs (Large Language Models) and other forms of Generative AI. Anyone like me who knew about that paper was confident that AI would see a big surge, the only uncertainty was when. It came earlier than almost anyone expected. And whilst OpenAI dominated the mainstream press, with the breakout of ChatGPT in late 2022, there were others already with projects shipped, and/or shelved well before that. We became aware aware of, and/or involved in these during 2021 at Mojeek, and after I joined in 2020.

At Mojeek we have been following these recent developments in AI closely since. We use and develop typically small and efficient ML models for various tasks. And as you may know we use an open source LLM (Mistral) for Mojeek summaries, applying RAG (Retrieval-Augmented Generation) using the Mojeek search results. As it happens the Mojeek API was used by FAIR who pioneered RAG back, in 2021 so we are well aware of how this is used, and how it plays a massive if largely unseen part of Generative AI products nowadays.

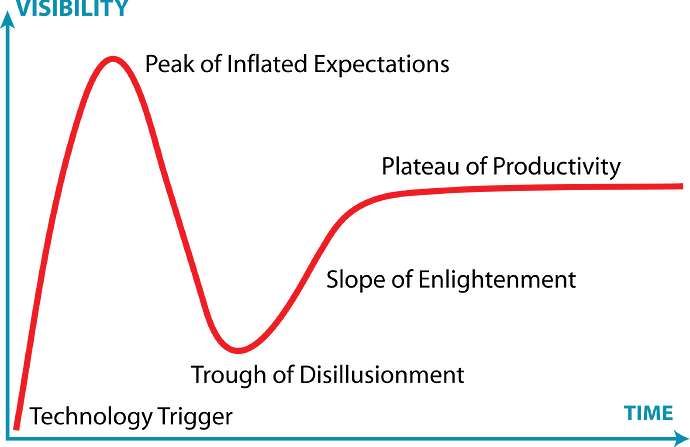

Where are we heading? Yes, there is a hype wave, and we are now past the peak. And in my opinion there is plenty of utility already showing, particularly in enterprise products, so we won’t see the bubble burst. It will likely, and arguably is, deflating. And sadly some of the “alternative” AI companies are being swallowed up by Big Tech; Infection into Microsoft and Character into Google. Yes there are all sort of issues that should concern us; hallucinations, copyright, data “theft”, rampany data harvesting, and further privacy erosions.

AI “progress” is best seen as a long curve of development, and so I hope this longer than expected post helps with that. Thanks for reading this far, if you did.