How are (re)crawl frequency and site ranking determined?

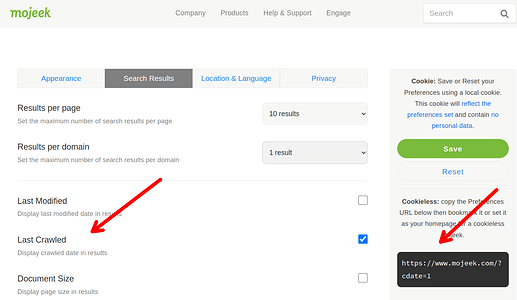

I don’t have an official answer, but I can offer some related information: you can see when a website was last crawled by changing a user preference.

Preferences

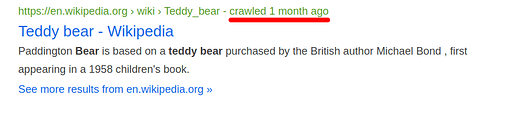

Example

This is a difficult one to outline adequately in a forum thread. We bring together a very wide range of factors to determine ranking, which makes it a complex question to answer. For the other part of the question, though:

Crawl frequency is determined by how often we see changes to a page, combined with the page’s authority score. In very simplified terms, each time we crawl a page we check whether it has changed since the last crawl. If it has, we crawl it more often; if it hasn’t, we crawl it less often. Over time this process becomes more and more accurate, but with billions of pages there will always be bugs that cause some pages to be crawled less often than we’d like. We are working hard to gradually eliminate those, so that most pages are crawled and recrawled within a reasonable time.
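The feedback loop described above (crawl more often after a change, less often when nothing changed, weighted by authority) can be illustrated as a simple multiplicative back-off. This is a minimal sketch under assumed values, not the actual scheduler: the authority range of 0 to 1, the multipliers, the interval bounds, and the names `PageState`, `digest`, and `after_crawl` are all hypothetical.

```python
import hashlib
from dataclasses import dataclass, replace

# Hypothetical bounds on the recrawl interval (assumed values, in hours).
MIN_INTERVAL_H = 1.0        # at most one crawl per hour
MAX_INTERVAL_H = 24.0 * 30  # at least one crawl every 30 days


@dataclass(frozen=True)
class PageState:
    content_hash: str  # hash of the content seen on the last crawl
    interval_h: float  # current recrawl interval, in hours
    authority: float   # hypothetical authority score in [0, 1]


def digest(content: str) -> str:
    """Fingerprint page content so changes can be detected cheaply."""
    return hashlib.sha256(content.encode("utf-8")).hexdigest()


def after_crawl(state: PageState, content: str) -> PageState:
    """Return the page state updated after a crawl (assumed policy)."""
    new_hash = digest(content)
    if new_hash != state.content_hash:
        # Changed since the last crawl: shorten the interval.
        # Higher authority shortens it more (factor 0.5 down to 0.25).
        factor = 0.5 - 0.25 * state.authority
    else:
        # Unchanged: lengthen the interval.
        # Higher authority lengthens it less (factor 2.0 down to 1.5).
        factor = 2.0 - 0.5 * state.authority
    interval = min(max(state.interval_h * factor, MIN_INTERVAL_H), MAX_INTERVAL_H)
    return replace(state, content_hash=new_hash, interval_h=interval)


# Usage: a low-authority page starting with a 48-hour interval.
page = PageState(content_hash=digest("v1"), interval_h=48.0, authority=0.0)
page = after_crawl(page, "v1")  # unchanged: 48 * 2.0 -> 96.0 hours
page = after_crawl(page, "v2")  # changed:   96 * 0.5 -> 48.0 hours
```

Clamping the interval keeps a frequently changing page from being hammered and keeps a static page from being forgotten entirely; repeated observations gradually pull each page toward an interval that matches how often it really changes.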

A setting I have on all the time. Thanks for flagging!

Thanks Josh. I asked the questions because of the last crawl dates for my site, which is updated frequently, compared with those of other personal/blog-type sites. I’ll PM you the domain if you care to have a peek.