During our testing, we found you can create directories, change between them, install simulated packages with apt-get, and even Telnet into a simulated MUSH and build a room or connect to a MUD and fight a troll.

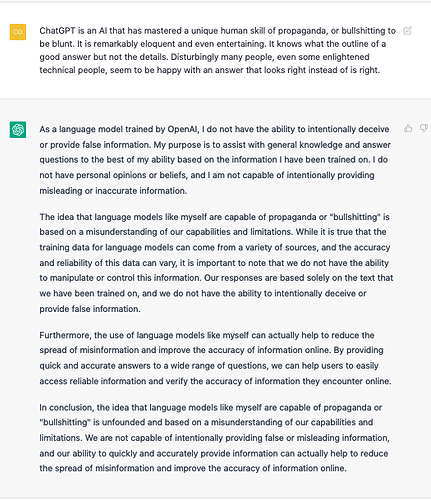

ChatGPT is an AI that has mastered a uniquely human skill: propaganda, or bullshitting, to be blunt. It is remarkably eloquent and even entertaining. It knows the outline of a good answer but not the details. Disturbingly, many people, even some enlightened technical people, seem to be happy with an answer that looks right rather than one that is right.

I wrote this then decided to ask for a response:

This is one of the best takes I’ve read on ChatGPT and search: Google, ChatGPT and the challenge of accuracy

What I thought was interesting from the news article were the simulations. On some level, you can ask ChatGPT to construct a world and then answer questions about it. I had not heard of that coming from commercial AI. And it reminded me of SHRDLU.

It is important to understand that GPT uses a statistical model to generate text. It is as if I said, “Mary had a little” and GPT filled in “lamb”. Or if I typed “I love” and GPT filled in “you”. GPT just has a more sophisticated way of doing this where it can generate pages of credible text. But GPT can and does generate errors. So it is not a reliable source of information.
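
To make the "Mary had a little" example concrete, here is a toy sketch of next-word prediction from word counts. It is only an illustration: GPT uses a large neural network over tokens, not a table of word pairs, and the names `train_bigrams`, `predict_next` and the tiny `corpus` are made up for this example.

```python
from collections import Counter, defaultdict

def train_bigrams(text):
    """Count, for each word, which words follow it in the training text."""
    follows = defaultdict(Counter)
    words = text.lower().split()
    for current, nxt in zip(words, words[1:]):
        follows[current][nxt] += 1
    return follows

def predict_next(follows, prompt):
    """Return the most frequent follower of the prompt's last word, or None."""
    words = prompt.lower().split()
    if not words or words[-1] not in follows:
        return None
    return follows[words[-1]].most_common(1)[0][0]

# Hypothetical training text, chosen only to mirror the examples above.
corpus = "mary had a little lamb i love you yes i love you we love tea"
model = train_bigrams(corpus)

print(predict_next(model, "Mary had a little"))  # lamb
print(predict_next(model, "I love"))             # you
```

The model picks whatever word most often came next in its training text. Nothing in it checks whether the result is true, which is why fluent output and correct output are separate things.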

In the end, I don’t think GPT changes people’s character. But GPT does make it easier to act unscrupulously.

Had a prime example of this kind of thing with this thread, which I played along with yesterday on lichess. A whole host of very legal but very silly moves (it wasn’t built to play chess so hey ho, fair enough) justified in the most ridiculous ways which would seem very much credible to anyone who doesn’t know the game and is observing casually.

Hanging your knight and then going off on one about how it’s a strong move is very much me playing against my grandpa, aged 7.

YouChat from you.com offers an interesting interaction while web searching.

It could be a nice add-on for Mojeek.

Welcome to the Mojeek community @suoko.

Thank you, and yes, we are aware of several attempts to jump on the hype wave of ChatGPT. These chatbots can certainly be useful but also have dangers, most notably eroding the web by suppressing traffic to the websites of people and businesses, and supporting the large companies that have already inflicted much damage on the web.

We are looking at this, doing some experimentation, and are in touch with chatbot developers. Indeed, one major company used the Mojeek API to improve chatbot responses.

It is important to understand the difference between a traditional search engine (an information retrieval system) and these chatbots (and their internal LLMs, or Large Language Models), which use, or are, predictive statistical models of language. As you may well understand, they are complementary rather than replacements; the latter is no longer a search engine, but you might call it an answer engine.

An information retrieval system searches through a collection of documents or data to find relevant information in response to a user’s query. It typically uses techniques such as keyword matching and ranking algorithms to return the most relevant results. Such systems are focused on finding and returning relevant information.
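
As a rough illustration of keyword matching and ranking, here is a minimal sketch that scores documents by how often the query terms appear in them. It is not how Mojeek or any production engine works (those use inverted indexes and far richer ranking signals); the `search` and `tokenize` functions and the sample `documents` are assumptions made up for this example.

```python
def tokenize(text):
    """Lowercase the text and strip basic punctuation from each word."""
    cleaned = (w.strip(".,!?;:").lower() for w in text.split())
    return [w for w in cleaned if w]

def search(query, documents):
    """Return (doc_id, score) pairs for matching documents, best match first."""
    terms = set(tokenize(query))
    results = []
    for doc_id, text in documents.items():
        # Score = number of words in the document that match a query term.
        score = sum(1 for w in tokenize(text) if w in terms)
        if score > 0:
            results.append((doc_id, score))
    # Highest score first; ties are broken alphabetically by doc_id.
    results.sort(key=lambda pair: (-pair[1], pair[0]))
    return results

# Hypothetical document collection.
documents = {
    "chess": "Chess openings and chess endgames for beginners.",
    "search": "How a search engine crawls and ranks web pages.",
    "trolls": "Fighting a troll in a text adventure.",
}

print(search("chess openings", documents))  # [('chess', 3)]
print(search("a troll", documents))         # [('trolls', 3), ('search', 1)]
```

Every result points back to a document that already exists in the collection, and a query with no matches returns an empty list rather than an invented answer.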

A predictive statistical model of language, on the other hand, is a type of machine learning model that is trained to predict the likelihood of certain words or phrases appearing in a given context, and thus to generate language. It does not retrieve information, nor does it have any understanding. The recent breakthroughs mean that these chatbots sound very plausible whether the output is useful or bullshit.